Introduction

On this first edition of Big Data Congress, new trends, challenges and success cases have been presented to the public, giving an overall current view of Big Data. It has been very interesting seeing how different sectors strategically rely on the processing of considerable amount of data in order to extract relevant information so they can improve their decision making.

The congress, that has lasted two days, has had a first day focused on theorical aspects of Big Data while the second day was more focused on practical uses and tools.

Big Data Congress Day 1

The first day has started with examples on the application of Big Data to macro-statistics and on the definition of a roadmap to bring Big Data to an organization. People have also commented on organizations led by data, the different applications that Big Data has on the explotation of customer data or city management. The last event has been a panel discussion on the current challenges of Big Data.

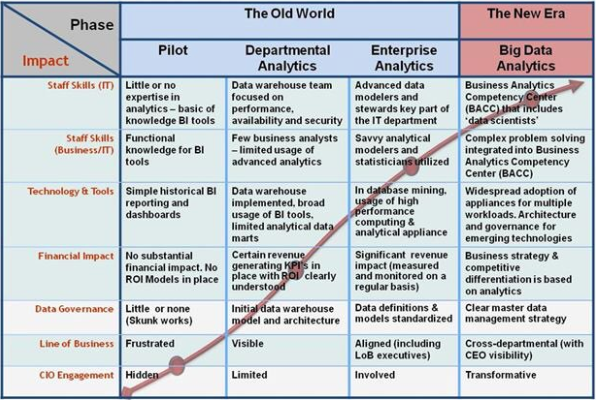

We specially highlight IDC’s roadmap, really interesting if you want to position a company regarding the use and management of its data when it comes to the benefits of Big Data.

A quite interesting discussion has been the size of data in order to be considered Big Data or Small Data. Marc Torrent’s conclusion has been the most convincing of them all (at least to us). The fact is that it does not matter if it is big or small data, the most important thing of all is that decisions are based on your data. Otherwise said, the volum of data has no importance because what really matters is the decision making of an organization based on data.

As all technologies, Big Data also has its own slogans. During Big Data Congress we have learned that Big Data is based on the V rule (at least in Spanish):

-

Great Volum of data

-

Variety of fonts and data formats

-

Processing Speed

-

Data Accuracy

-

The result has to be Valuable information

It seems that nearly any word starting with V is a valid attribute of Big Data...

It is important to highlight that the three main challenges of Big Data stress the immaturity of this technology. The first challenge is that, right now, each organization needs to plan and determine which kind of specific value can extract from its data. The second challenge refers to the management of this data on an obtention, storage and processing level. Finally, the third challenge is the lack of specialized staff.

Regarding the big questions about Big Data, different subjects have been discussed. As newcomers to the world of Big Data, the initial difficulties of defining the Big Data strategy for organizations have surprised us. That makes it clear that the best option for companies that want to take profit from their data right now is to rely on the advice of expert consultants if they want to assess the problem right from the beginning and avoid unproductive investments.

One of the other questions is How does privacy apply on Big Data? Is the right to privacy respected? Does the user know which data is he/she giving and why? All these questions have been debated and, again, we have concluded that the immaturity of this sector leaves all these aspects unspecified.

It seems that although theoretically they respect the law, each company applies its own privacy standards. Often after accepting the privacy policies of two applications of the same company, we do not realize that the intersection this information on Big Data applications can result in indirect violations of privacy. These types of cases are never contemplated when implementing policies.

Big Data Congress Day 2

The second day started with Ricardo Baeza-Yates pointing out the challenges of Big Data, but in this case on the web. After that, analytical models to improve business efficiency and reduce churn (users who stop using a service) were discussed. Innovation and industry startups have also been discussed. The last conference was given by the project managers of the Apache Flink project, a new Big Data tool.

The vast amount of data that companies with lots of users have to manage have really impressed us. These companies generate, daily, thousands of teras of data that later need to be analyzed using Big Data tools. Daniel Villatoro has presented the main mistakes that a Data Scientist is not allowed to do and, if you do not pay attention, are really likely to occur.

The way the whole knowledge extracted from Big Data communicates, sometimes trivial and sometimes complex, has been debated by Alberto González and Miguel Nieto from two different perspectives, from a macroeconomic and a social study and from way of capturing the different types of data into maps.

The introduction to the concept of Smart Data has also been interesting. Consisting in focusing the collection and analysis of data in order to obtain the highest possible value for the organization, and not just trying to massively accumulate data.

Oracle has presented its Business Intelligence and Analytics Big Data solution, meant to obtain value for organizations. This tool drastically diminishes developing and project integration time. A product that seems quite interesting, just like the modular philosophy of all the functionalities that it has available. Something that has not been interesting is the example where the Big Data platform had been used to ultimately deduce that small companies pay salaries to workers that have bank accounts in different banks.

Barcelona Supercomputing Center has presented Aloja, a project designed to facilitate the configuration of hundreds of parameters of Big Data tools through the feedback of the performance for each use case. In order to obtain good results, setting up a tool, in this case Hadoop, according to the machine on which it is being installed is not enough. We must also take into account external factors such as the algorism that is running, the origin and destination of the data, etc. As always, it comes to detect where is the bottleneck and optimize the settings to prevent them.

Apache Flink presentation has been good. It is a tool based on the continuous processing of a flow of data, in contraposition of the most popular tools that alternate the processing when obtaining the data. It seems that soon enough we will be able to think of this tool as a good alternative given the use case and, in fact, right now, it is being used in some production environments.

Conclusions

Big Data is a technology that it is stil on an Early Adopters stage. Right now, it lacks some standards and good practices are only on an individual or organization level. This does not mean that they cannot add value though: Big Data is nowadays an essential tool for lots of organizations that rely on it in order to optimize resources and investments.

The lack of qualified staff has also been commented continuously. Thinking about specializing in Big Data may be a great future investment if you want to find a good job.